From Raw Market Feeds to Usable Insights: Building a Financial Data Pipeline

Abstract

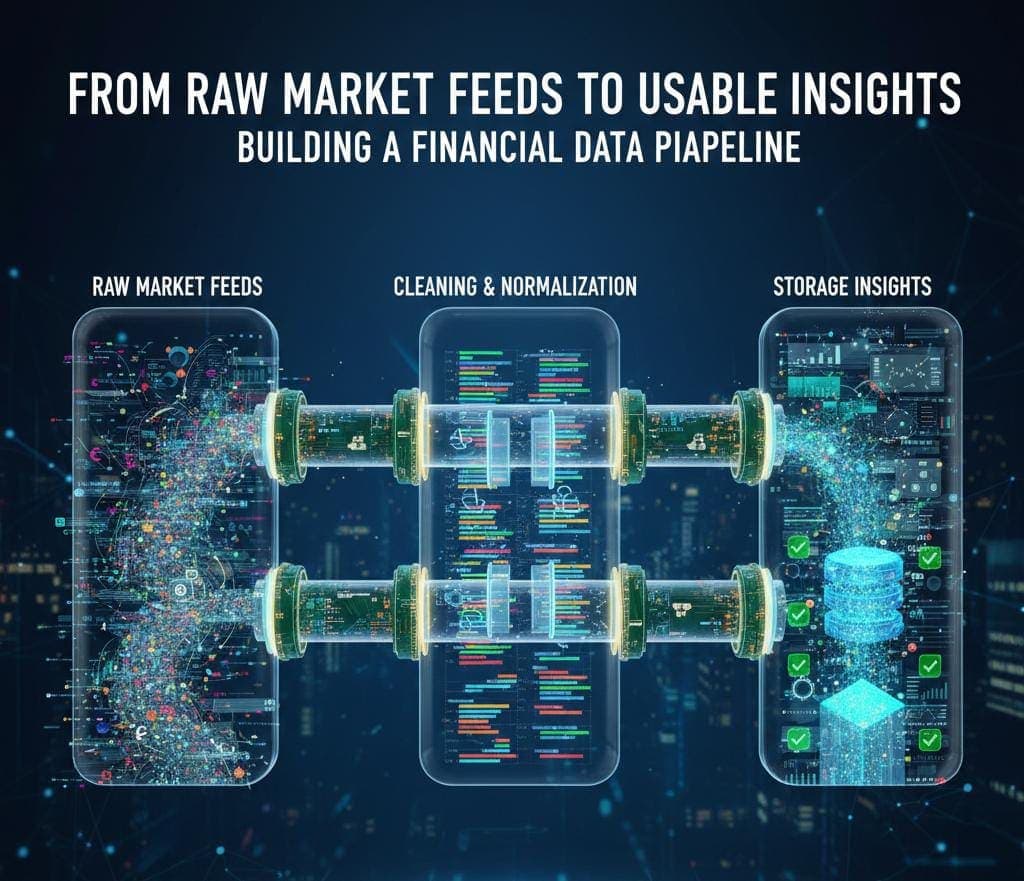

When the financial data is collected, it does not become useful instantly at that moment. The process of transforming raw market feeds into structured, reliable datasets using data pipelines is explained in this article.

ABSTRACT:

When the financial data is collected, it does not become useful instantly at that moment. The process of transforming raw market feeds into structured, reliable datasets using data pipelines is explained in this article which further highlights considerations of designs and key challenges involved in the process.

INTRODUCTION:

Data is continuously generated from multiple sources like exchanges, APIs, alternative data providers and web platforms. Unpredictable corporate actions like update of prices in milliseconds and real time evolution of market news can occur. However, in its original form raw financial data is rarely ready for decision-making or direct analysis.

Before building any risk model, strategy of trading and analytical insights, proper passage of this data must be ensured through a well-designed pipeline. Noisy, fragmented and inconsistent inputs are transformed into trustworthy and standardized datasets through these pipelines. Errors at this stage can propagate downstream, which may further lead to flawed financial decisions and misleading insights.

All the phases in the lifecycle of financial data – from ingestion to validation and other designs and challenges required for consideration when a robust financial data pipeline is built, are explored in this article.

RAW FINANCIAL DATA AND ITS SOURCES:

There can be several sources from which financial data originates. Each source has their own structures and limitations. APIs provided by data providers deliver fundamentals, historical prices and corporate actions whereas volume data and high-frequency prices are provided by exchange feeds. Social media sentiment, news feeds and macroeconomic releases which constitute alternative data sources further add complexity, despite increasing complexity.

These sources differ not only in formats but also in symbol definitions, conventions of timestamps and frequencies of updates. If they are combined without handling carefully, inconsistencies that distort analytical outcomes will be obtained as consequences. Whether a data source is reliable or not requires understanding the nature of the data source and this is the first step of a reliable data pipeline.

CHALLENGES OF STREAMING AND INGESTION OF DATA:

Collection of data in batch mode or real time is done by the ingestion layer. Arrival of market feeds happen at high velocity, particularly in high frequency or intraday environments. Common challenges during ingestion consist of duplicate messages, delayed updates or packets that are missing.

Fault tolerance must be a major property of streaming architectures which must be capable of handling data bursts while ensuring that no loss occurs. Several factors become critical at this stage which are required to preserve data integrity. These factors consist of maintaining latency constraints, managing failover mechanisms and ensuring message ordering.

NORMALIZATION AND CLEANING OF DATA:

Incorrect prices, missing values, record that are duplicated and symbols that are inconsistent are common constituents of raw financial data. Splits of stock, mergers, dividends and changes of ticker result in more complexity that should be handled carefully.

Data from different sources should follow a unified schema and this is ensured by normalization which includes aligning formats of price, standardizing timestamps across time zones and mapping symbols consistently over time. Simple analytical queries can result in misleading consequences in absence of this step.

STORAGE AND MODELING OF DATA:

After cleaning, financial data must be stored in a way that supports both real-time access and historical analysis. Depending on latency and query requirements, warehouses of data, time-series databases and lakes of data find the most common use.

Properly modelling the data is extremely important. To ensure accurate reconstruction of the previous market states by models and analysts, time partitioning, indexing key identifiers and maintaining historical versions of records are extremely important tasks. Scalability gets limited and analytical workflows get slowed down due to poor storage design.

QUALITY CHECKS AND VALIDATION:

The final safeguard in the pipeline is validation. The falling of price within logical ranges, consistent volumes and correct alignment of timestamps are ensured by this stage. Anomalies can be detected by cross checking against reference data, before they reach the systems present in the downstream level.

In finance, trading decisions or risk calculations can be influenced by even minor data errors and consequently automated quality checks are very important. Data quality is continuously monitored by reliable pipelines rather than treating validation as a one-time process.

DESIGN CHALLENGES FACED BY COMMON PIPELINE:

Speed, scalability and accuracy must be balanced by financial data pipelines. Low latency is often prioritized by high-frequency systems whereas historical accuracy and completeness are emphasised by pipelines that are research oriented. It is often impractical to design a single pipeline that satisfies all use cases.

Transparency and reproducibility are demanded by additional regulatory requirements. Data lineage must be tracked by pipelines to provide clear audit trails which further ensures that analytical results can be traced back to their original sources.

CONCLUSION:

A foundational step in any financial system is transforming raw market feeds into usable insights. Data pipelines that are well-designed ensure the accuracy and consistency of the financial data to ensure that it is ready for analysis before reaching models or decision-makers. Each stage plays a critical role in maintaining the integrity of data, from ingestion and cleaning to storage and validation.

The importance of robust data pipelines increases with increasing speed and data-driven nature of financial markets. Investing in strong pipeline architecture not only reduces analytical risks but also enables better strategies, more reliable insights and informed financial decisions.